The woods are still wet from the storm. With each breeze, the trees shudder down droplets, as if remembering the rain, and the surrounding meadow steams lightly in the sun. Everything is buzzing. Black-eyed Susans tilt under the weight of the bumblebees and grasshoppers spring from their hiding spots in the grass.

I’ve decided to try and know this meadow as best I can, so I’m sitting on a bench with my phone pointed at the sky, recording bird songs. I’m smelling the wildflowers. I’m taking photos of leaves and comparing them to illustrations in a local field guide. But descriptions are static, and out here in the meadow, everything changes. Without thinking, I pop open a pod and it reveals a tidy row of seeds as green as new peas.

Oh shit, I think. It’s meadows all the way down.

What draws me to nature is how persistently it defies capture. I could count each blade of grass, every worm, ant, bud, and splat of bird-shit, and still know nothing about this meadow. It’d be about as useful as counting grains of sand on a beach. I’m reminded of the late essayist Barry Lopez, who spent thirty years watching and writing about a stretch of the McKenzie River near his home in Western Oregon.

A true student of the living world, Lopez was confident in little else but the fact that the river would continue to reveal itself over time. A river, he explained, cannot be known, not the way a rocket engine can. Because although an engine is complicated, a river is complex, “an expression of biological life, in dynamic relation to everything around it.” Those who really understand landscape—field biologists, hunting and gathering peoples, artists—prefer specificity: how the light moves on the water on a given day. “This view,” Lopez wrote, “suggests a horizon rather than a boundary for knowing, towards which we are always walking.”

I like Lopez’s model of a horizon, rather than a boundary, for knowing. Our relation to that horizon never changes, no matter how much ground we cover trying to reach it. As any scientist will tell you, the more we learn, the less we know, and this is especially true in biology: for all the data we gather, the dynamics of living systems remain impossible to capture and model at scale. It’s all meadows, all the way down.

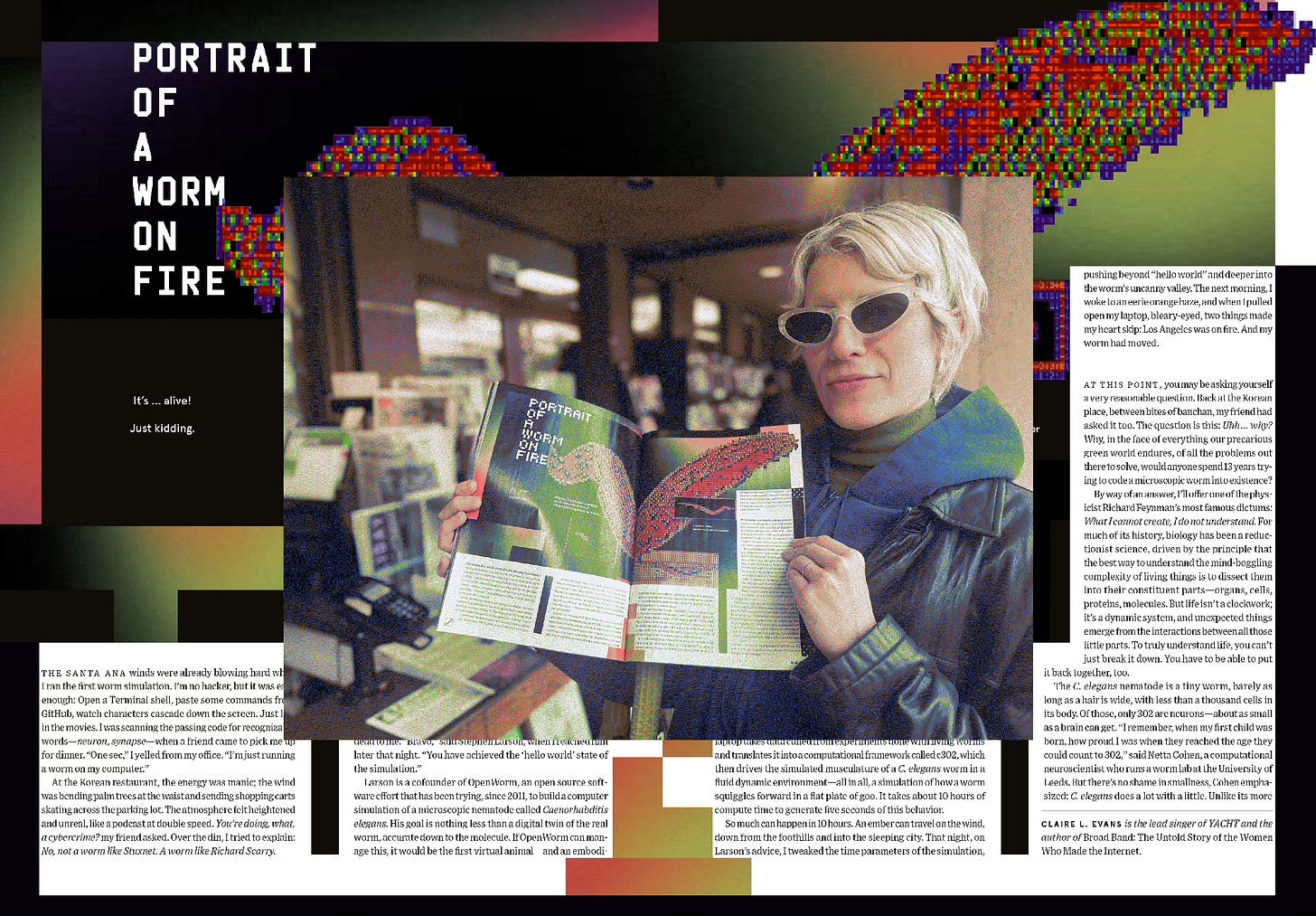

In March, I published a feature in WIRED about an ongoing open-source effort to build a computer simulation of a microscopic nematode worm. This worm is one of the most comprehensively studied organisms in science; we’ve had a wiring diagram of the 302 neurons that constitute its brain since the late 1970s. And yet every neuroscientist I spoke to despaired at the possibility of ever creating a working model of how those 302 neurons drive the worm’s behavior. They described the undertaking to me as a cathedral—something they don’t expect to see completed in their lifetimes.

I find this, above all, comforting. No need to stress about the coming of Artificial General Intelligence if Artificial Worm Intelligence remains such a distant impossibility. I hope it underscores how mightily naive we are about the possibility of truly imitating life. As I write in the WIRED piece, it currently takes ten hours of compute time to render a single second of a basic mechanical model of a worm inching forward. To make a complete, molecular-scale worm simulation—one that moves backward, too, and more importantly, expresses why a worm does anything—would take at least 10 more years of research, cost tens of millions of dollars, and require data from up to 200,000 real-life worm experiments. That’s a whole lot of effort to pull off what living worms can do with nothing much more than compost and sunlight.

Of course, in science, modeling isn’t usually about this kind of total representation. Instead, modeling is a practice of constructing the false in pursuit of the real. As the molecular biologist Dennis Bray writes, a model is “a way of knowing,” a “symbolic representation that helps us to comprehend the phenomenon.” Like language, models help us tinker with things too complex or all-encompassing to otherwise grasp.

But there are different ways to make a model, and different reasons for doing so. A physicist might reduce the world like an equation, stripping complex systems down to their fundamentals—force, energy, matter, heat—in order to understand them from first principles. She works from the bottom up. The engineer, more practically minded, models from the top down. Her objective in modeling nature is to mimic it, borrowing its distributed architecture to design a better internet or streamline a swarm of robots. For the engineer, how a system works matters far more than why. The models used by physicists and engineers can exist fairly independently of the systems they describe, as interesting mathematical toys, or as blueprints for machines.

For the biologist, however, modeling guides empirical study. Her models are bound to the world, driving new questions about life and how it works. She compares computational ant networks to real colonies and mathematical swarms to mobbing flocks of jackdaws. For the biologist, if a model doesn’t help her to understand the world—if it’s not a good fit—it’s as good as useless. This means that when a biological model is uncoupled from the original system it imitates, confusing things happen.

Take AI: machine learning models are simplified abstractions of complex biology, cribbing the architecture of neural networks from human brains. They don’t mirror brains down to the molecule, because (as I hope I’ve conveyed here) there isn’t a computer on Earth powerful enough to do such a thing, and even if there were, such a simulation would be as opaque to us as the original wetware is. That doesn’t stop us from confusing the map with the territory, and conflating the output of these models with intelligence itself. This is dangerous territory for many reasons, not least because it constrains our understanding of what intelligence is, and what forms it may take in the meadows, rivers, and ever-distant horizons of the living world.

In other news, I just got back from Europe, where I was invited to give a keynote talk in Stockholm about some of these ideas—uncomputable worms, complex living systems, ways of knowing—in the context of AI, the ultimate inadequate model. The video of the talk is kinda doing numbers on YouTube, at least by my standards.

Blippo+, the live-action video game I’ve been working on with my friends for five years, is finally out in the world. It’s nearly impossible to explain, but if you’re into pirate television, video art, broadcast cable, Buckaroo Banzai, Star Trek, computer history, and far-out theories about consciousness particles, you will probably love it. It’s currently rolling out as an 11-week collective experience for the black-and-white Playdate console, but the full-color version is coming out for Nintendo Switch and PC in the fall. You can wishlist the game on Steam if that’s your thing. And if the idea of a “live-action video game” has you scratching your head, I’ve written a longform primer about the history of this maligned genre—a category-resistant hybrid of interactive cinema and cinematic gaming—for Pioneer Works.

Also, I just found out that my piece about lucid dreaming for Noema Magazine won a Southern California Journalism Award for best “Soft News” feature. 🏆

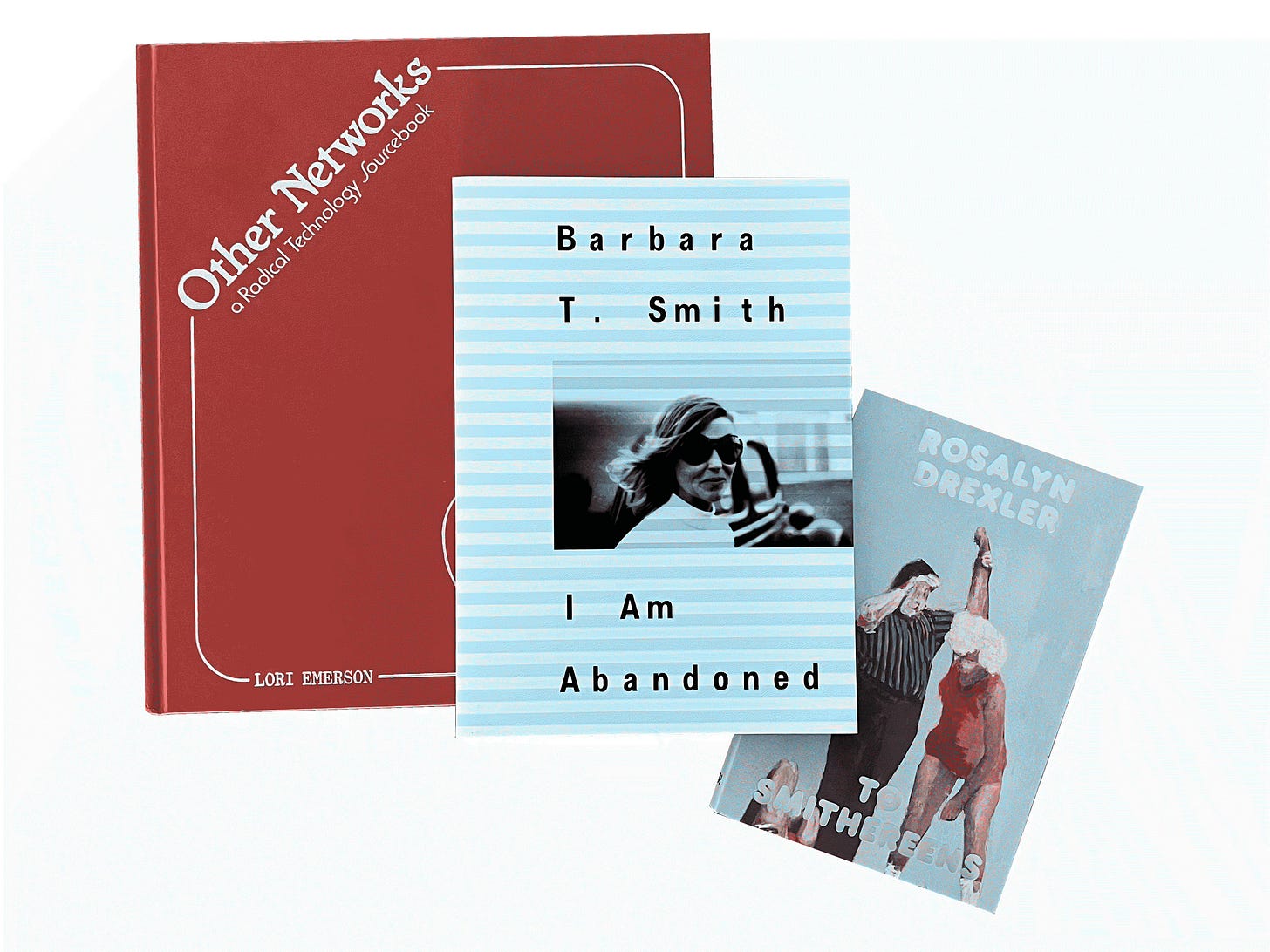

Here are three new books I really like:

Lori Emerson’s Other Networks, a “radical technology sourcebook” of non-internet communications networks—from talking drums, smoke signals and pigeon post to dial-in party lines, Ham radio, and barbed wire telephones. It’s a fascinating compendium of all the ways human beings have contrived to keep in touch with one another, and a vision of a more playful world; Emerson pairs technical entries with examples of ways artists have subverted and tinkered with communications technologies, proof that she fully gets who the real engineers are. Emerson is a founding director of the Media Archaeology Lab at the University of Colorado Boulder, which just seems like the coolest place in the West.

I Am Abandoned, a slim volume from Primary Information books documenting the performance artist Barbara T. Smith’s spicy 1976 staging of a dialogue between two unhinged psychoanalytic computer programs at Caltech. When I was in Europe, I was lucky to catch a fantastic exhibition of feminist computer art at Kunsthalle Vienna; Smith’s piece “Outside Chance,” in which she tossed some 3,000 printouts of computer-generated snowflakes out the window of a Vegas hotel, stole my heart (documentation here, courtesy of the Getty Research Institute).

Rosalyn Drexler’s 1972 novel To Smithereens. Drexler, a painter, was both a founding figure of the New York Pop art scene and, briefly, a professional wrestler. The two vocations combine in this novel about a scrappy female wrestler and her nebbish art critic beau. A bawdy sendup of the ’70s Manhattan art world, it quite literally pulls no punches. Long out-of-print, it’s been rescued by Hagfish Books, a small press who sniff out overlooked writers and give them a second chance at adoration (full disclosure: I’m on Hagfish’s advisory board).

Finally, I’ll leave you with a thought from one of the people I interviewed for the WIRED piece—the computer scientist Stephen Larson, founder of OpenWorm:

The way I see it, a lot of understanding biology is understanding the informational relationships between things. DNA is essentially a little packet of data, of information. What really matters about it is not that it's made of molecules. It's the sequence and the relationship of the molecules to each other. There's a lot of biology that is highly based on information and the way that information works. And so it feels pretty natural, if you're going to go simulate a thing, to say that the aliveness comes from the informational structure more than the material components.

🪱,

Claire